[AAIL21] Patching interpretable And-Or-Graph knowledge representation using augmented reality

Abstract

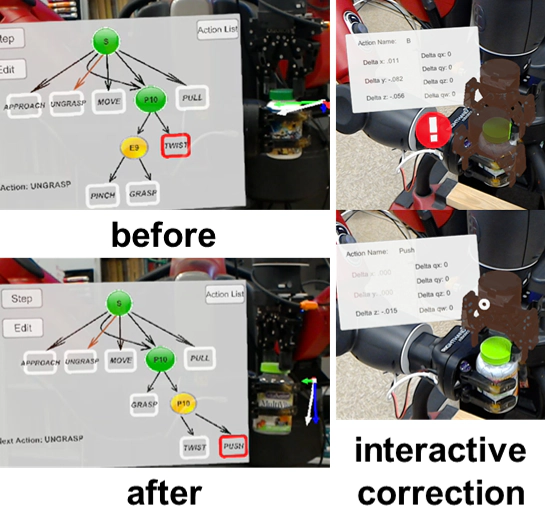

We present a novel augmented reality (AR) interface that provides effective means to diagnose a robot's erroneous behaviors, endow it with new skills, and patch its knowledge structure, which is represented as an And-Or-Graph (AOG). Specifically, an AOG representation of opening medicine bottles is learned from human demonstration; it yields a hierarchical structure that captures the spatiotemporal compositional nature of the task and is highly interpretable to users. Through a series of psychological experiments, we demonstrate that explanations of a robotic system inherited from and produced by the AOG can foster human trust better than other forms of explanation. Moreover, by visualizing the knowledge structure and robot states, the AR interface allows human users to intuitively understand what the robot knows, supervise the robot's task planner, and interactively teach the robot new actions. Together, these features let users quickly identify the reasons for failures and conveniently patch the current knowledge structure to prevent future errors. This demonstrates both the interpretability of our knowledge representation and the new forms of interaction afforded by the proposed AR interface.
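To make the And-Or-Graph idea concrete, the following is a minimal Python sketch under stated assumptions, not the representation used in this work. It assumes And-nodes decompose a task into sub-steps executed in the listed (temporal) order, Or-nodes list alternative ways to achieve a step, and terminals are atomic actions; the spatial and state information an AOG can also carry is omitted. The node names (`grasp`, `unlock`, `twist`, `push_twist`, `squeeze_twist`, `remove_cap`) and the helpers `sequences`, `find`, `patch`, and `build_demo_aog` are hypothetical illustrations. "Patching" is reduced here to appending a newly taught action as an extra branch under an existing Or-node, a simplification of the knowledge patching described above.

```python
from dataclasses import dataclass, field
from itertools import product
from typing import List, Optional


@dataclass
class Node:
    name: str
    kind: str  # "and", "or", or "terminal" (hypothetical encoding)
    children: List["Node"] = field(default_factory=list)


def sequences(node: Node) -> List[List[str]]:
    """Enumerate every action sequence the graph can generate."""
    if node.kind == "terminal":
        return [[node.name]]
    if node.kind == "or":
        # An Or-node chooses exactly one of its alternatives.
        out: List[List[str]] = []
        for child in node.children:
            out.extend(sequences(child))
        return out
    # An And-node concatenates one expansion of every child, in order.
    out = []
    for combo in product(*(sequences(c) for c in node.children)):
        seq: List[str] = []
        for part in combo:
            seq.extend(part)
        out.append(seq)
    return out


def find(node: Node, name: str) -> Optional[Node]:
    """Depth-first search for a node by name."""
    if node.name == name:
        return node
    for child in node.children:
        hit = find(child, name)
        if hit is not None:
            return hit
    return None


def patch(root: Node, or_name: str, new_child: Node) -> None:
    """Add a newly taught alternative under an existing Or-node."""
    target = find(root, or_name)
    if target is None or target.kind != "or":
        raise ValueError(f"no Or-node named {or_name!r}")
    target.children.append(new_child)


def build_demo_aog() -> Node:
    # Hypothetical bottle-opening task: grasp, unlock (two known ways), remove cap.
    unlock = Node("unlock", "or", [Node("twist", "terminal"),
                                   Node("push_twist", "terminal")])
    return Node("open_bottle", "and", [Node("grasp", "terminal"),
                                       unlock,
                                       Node("remove_cap", "terminal")])


if __name__ == "__main__":
    root = build_demo_aog()
    print(len(sequences(root)))  # 2 alternatives before patching
    patch(root, "unlock", Node("squeeze_twist", "terminal"))
    print(len(sequences(root)))  # 3 alternatives after patching
```

Because each Or-node is an explicit, named choice point, a newly demonstrated action can be inserted locally without relearning the rest of the hierarchy; the enumerated sequences then show exactly which plans the patch makes available.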