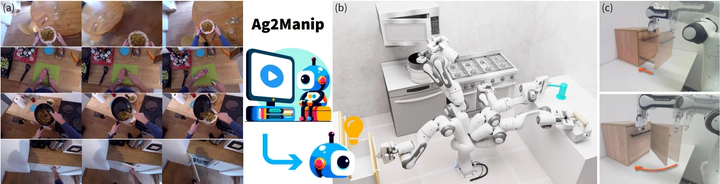

[IROS24] Ag2Manip: Learning Novel Manipulation Skills with Agent-Agnostic Visual and Action Representations

Abstract

Autonomous robotic systems capable of learning novel manipulation tasks are poised to transform industries from manufacturing to service automation. However, current methods (e.g., VIP and R3M) still face significant hurdles, notably the domain gap across robotic embodiments and the sparsity of successful task executions within specific action spaces, which result in misaligned and ambiguous task representations. We introduce Ag2Manip (Agent-Agnostic representations for Manipulation), a framework that addresses these challenges through two key innovations: (1) an agent-agnostic visual representation derived from human manipulation videos, in which embodiment-specific details are obscured to enhance generalizability; and (2) an agent-agnostic action representation that abstracts a robot’s kinematics to a universal agent proxy, emphasizing the crucial interactions between end-effector and object. Ag2Manip has been empirically validated on simulated benchmarks, showing a 325% performance increase without relying on domain-specific demonstrations. Ablation studies further confirm that both the agent-agnostic visual and action representations are essential to this success. In real-world evaluations, Ag2Manip significantly improves imitation learning success rates from 50% to 77.5%, demonstrating its effectiveness and generalizability across both simulated and real environments.
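The abstract reports gains in two forms: a relative increase (325%) and a pair of success rates (50% to 77.5%). The small Python sketch below separates absolute gains (percentage points) from relative gains, using only the real-world numbers quoted above. The helper names are hypothetical illustrations, not part of the Ag2Manip code. The abstract does not state the baseline behind the 325% figure, so the sketch only shows the multiplier that the standard definition of a relative increase would imply.

```python
def absolute_gain(before: float, after: float) -> float:
    """Difference in percentage points between two success rates given in percent."""
    return after - before


def relative_gain(before: float, after: float) -> float:
    """Relative increase in percent: (after - before) / before * 100."""
    if before == 0:
        raise ValueError("relative gain is undefined for a zero baseline")
    return (after - before) / before * 100.0


def multiplier_from_relative_gain(gain_percent: float) -> float:
    """Factor by which a quantity grows under a relative increase of gain_percent."""
    return 1.0 + gain_percent / 100.0


# Real-world imitation-learning success rates quoted in the abstract.
before, after = 50.0, 77.5
print(f"absolute gain: {absolute_gain(before, after):.1f} percentage points")  # prints: absolute gain: 27.5 percentage points
print(f"relative gain: {relative_gain(before, after):.1f}%")  # prints: relative gain: 55.0%
# Assumption: "325% increase" read as a standard relative increase over a baseline.
print(f"multiplier for a 325% increase: {multiplier_from_relative_gain(325.0):.2f}x")  # prints: multiplier for a 325% increase: 4.25x
```

Under this reading, the real-world result is a 27.5-point absolute gain, or a 55% relative gain. A 325% relative increase would correspond to roughly 4.25 times the baseline.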