Abstract

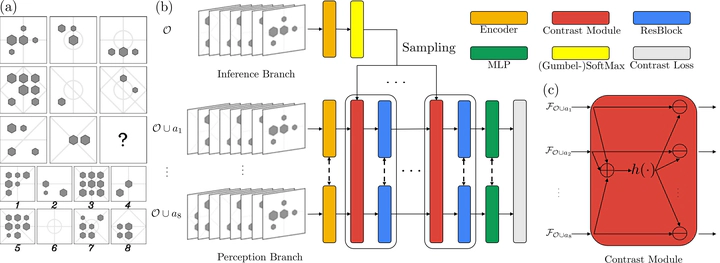

‘Thinking in pictures,’ [1] i.e., spatial-temporal reasoning, effortless and instantaneous for humans, is believed to be a significant ability to perform logical induction and a crucial factor in the intellectual history of technology development. Modern Artificial Intelligence (AI), fueled by massive datasets, deeper models, and mighty computation, has come to a stage where (super-)human-level performances are observed in certain specific tasks. However, current AI’s ability in ’thinking in pictures’ is still far lacking behind. In this work, we study how to improve machines’ reasoning ability on one challenging task of this kind: Raven’s Progressive Matrices (RPM). Specifically, we borrow the very idea of ‘contrast effects’ from the field of psychology, cognition, and education to design and train a permutation-invariant model. Inspired by cognitive studies, we equip our model with a simple inference module that is jointly trained with the perception backbone. Combining all the elements, we propose the Contrastive Perceptual Inference network (CoPINet) and empirically demonstrate that CoPINet sets the new state-of-the-art for permutation-invariant models on two major datasets. We conclude that spatial-temporal reasoning depends on envisaging the possibilities consistent with the relations between objects and can be solved from pixel-level inputs.