[AAAI19] Mirroring without Overimitation: Learning Functionally Equivalent Manipulation Actions

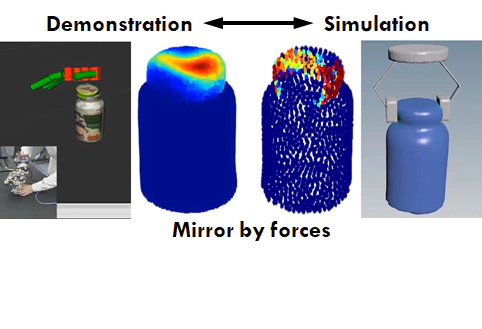

A robot mirrors human demonstrations with functional equivalence by inferring the action that produces similar force, resulting in similar changes of the physical states.

Abstract

This paper presents a mirroring approach, inspired by the neuroscience discovery of mirror neurons, to transfer demonstrated manipulation actions to robots. Designed to address the different embodiments of a human (demonstrator) and a robot, this approach extends classic robot Learning from Demonstration (LfD) in the following aspects: (i) it incorporates fine-grained hand forces collected by a tactile glove during demonstration to learn the robot’s fine manipulative actions; (ii) through model-free reinforcement learning and grammar induction, the demonstration is represented by a goal-oriented grammar consisting of goal states and the corresponding forces to reach those states, independent of robot embodiments; (iii) a physics-based simulation engine is applied to emulate various robot actions and mirror those that are functionally equivalent to the human’s, in the sense of causing the same state changes by exerting similar forces. Through this approach, a robot reasons about which forces to exert and what goals to achieve in order to generate actions (i.e., mirroring), rather than strictly mimicking the demonstration (i.e., overimitation). Thus the embodiment difference between a human and a robot is naturally overcome. In the experiment, we demonstrate the proposed approach by teaching a real Baxter robot a complex manipulation task involving haptic feedback: opening medicine bottles.
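The idea of selecting a functionally equivalent action can be illustrated with a small sketch. This is not the paper's implementation: it assumes a simulator that returns the force and resulting state for each candidate robot action, and it scores candidates with a simple weighted squared distance to the demonstrated force and goal state. All names (`Outcome`, `mirror_action`, `TOY_OUTCOMES`), the two-component force vector, and the numeric values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Hashable, Sequence, Tuple

Vector = Tuple[float, ...]  # hypothetical encoding of a force or a physical state


@dataclass(frozen=True)
class Outcome:
    """What a (hypothetical) physics simulator reports for one robot action."""
    force: Vector       # force exerted on the object
    next_state: Vector  # physical state after the action


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mirror_action(
    candidates: Sequence[Hashable],
    simulate: Callable[[Hashable], Outcome],
    demo_force: Vector,
    goal_state: Vector,
    force_weight: float = 1.0,
    state_weight: float = 1.0,
) -> Hashable:
    """Return the candidate whose simulated force and state change are
    closest to the demonstration (an assumed cost, not the paper's)."""
    def cost(action: Hashable) -> float:
        out = simulate(action)
        return (force_weight * sq_dist(out.force, demo_force)
                + state_weight * sq_dist(out.next_state, goal_state))
    return min(candidates, key=cost)


# Toy stand-in for a simulator: force = (downward push, twist torque),
# state = (1.0 if the lid is unlocked else 0.0,). Values are made up.
TOY_OUTCOMES = {
    "twist_only": Outcome(force=(0.0, 0.5), next_state=(0.0,)),
    "push_and_twist": Outcome(force=(1.8, 0.6), next_state=(1.0,)),
    "pull": Outcome(force=(-1.0, 0.0), next_state=(0.0,)),
}

best = mirror_action(
    candidates=list(TOY_OUTCOMES),
    simulate=TOY_OUTCOMES.__getitem__,
    demo_force=(2.0, 0.5),  # assumed force measured in the human demonstration
    goal_state=(1.0,),      # assumed goal: lid unlocked
)
print(best)
```

In this toy setup the robot's chosen action need not resemble the human hand pose at all; it only has to produce a similar force and the same state change, which is the distinction between mirroring and overimitation drawn above.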