Abstract

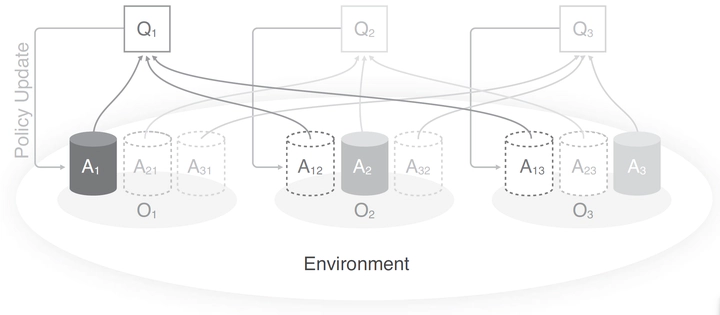

Coordinated hunting is widely observed in animals, and sharing rewards is often considered a major incentive for its success. While current theories about the role of sharing in coordinated hunting rest on correlational evidence, we reveal the causal roles of sharing rewards through computational modeling with a state-of-the-art Multi-agent Reinforcement Learning (MARL) algorithm. We show that, counterintuitively, selfish agents reach robust coordination, whereas sharing rewards undermines it. Hunting coordination modeled through sharing rewards (1) suffers from the free-rider problem, (2) plateaus at a small group size, and (3) is not a Nash equilibrium. Moreover, individually rewarded predators outperform predators that share rewards, especially when hunting is difficult, the group size is large, and the action cost is high. Our results shed new light on the actual importance of prosocial motives for successful coordination in nonhuman animals and suggest that sharing rewards might simply be a byproduct of hunting rather than a design strategy aimed at facilitating group coordination. They also suggest that current artificial intelligence models of human-like group coordination that assume reward sharing as a motivator (e.g., MARL) might not adequately capture what is truly necessary for successful coordination.